Dancebits AI

Overview

Dancebits is an AI-powered platform that makes choreography learning more accessible and efficient. It was developed during the Data Science Retreat bootcamp in 2024. Learning dance moves from video tutorials is often slow, and Dancebits tackles this by automatically segmenting choreographies into moves and giving real-time feedback.

Problem Summary

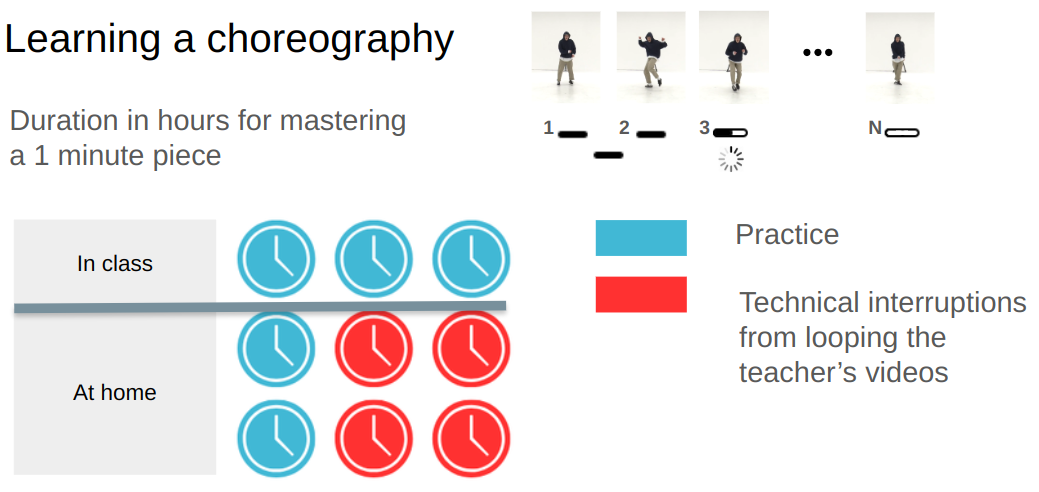

- Learning a choreography requires segmenting it into individual moves and then systematically looping through them, which is laborious to do by hand.

- Mastering a complex one-minute choreography to stage level on one's own takes about as long as a full class (3 hours), and 60% of that time is spent on the technical work of identifying and looping sections.

Technical Stack

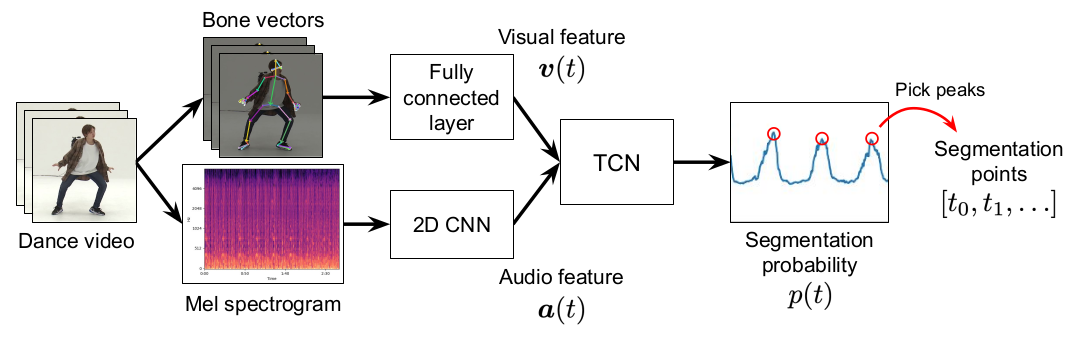

- Neural network model combining audio and visual processing

- Temporal convolutional networks (TCN) for movement sequence analysis

- MediaPipe integration for pose estimation

- Audio processing with librosa for tempo analysis and synchronization
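The TCN item above rests on one building block that such networks stack: a causal, dilated 1-D convolution over a per-frame feature sequence (for example, flattened pose landmarks). The sketch below is a minimal, framework-free illustration of that block, not the Dancebits model. The kernel values, the dilation schedule, and the names `causal_dilated_conv1d` and `receptive_field` are hypothetical. The receptive-field formula holds for a plain stack with one convolution per dilation level. A TCN with two convolutions per residual block doubles the `(kernel_size - 1) * sum(dilations)` term.

```python
from typing import List, Sequence


def causal_dilated_conv1d(signal: Sequence[float], kernel: Sequence[float],
                          dilation: int) -> List[float]:
    """Causal dilated 1-D convolution (cross-correlation, as in deep learning
    frameworks): out[t] = sum_i kernel[i] * signal[t - (k - 1 - i) * dilation].
    Values before the start of the sequence are treated as zero (left padding),
    so out[t] never depends on frames after t."""
    k = len(kernel)
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for i, w in enumerate(kernel):
            idx = t - (k - 1 - i) * dilation
            if idx >= 0:
                acc += w * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input frames seen by one output of a stack with one
    convolution per dilation level."""
    return 1 + (kernel_size - 1) * sum(dilations)


if __name__ == "__main__":
    # Push a single impulse through three layers with dilations 1, 2, 4.
    x = [1.0] + [0.0] * 9
    for d in (1, 2, 4):
        x = causal_dilated_conv1d(x, [1.0, 1.0], d)
    print(x)  # first 8 values are 1.0, the rest 0.0
    print(receptive_field(2, [1, 2, 4]))  # 8
    print(receptive_field(3, [1, 2, 4, 8]))  # 31
```

With a kernel size of 3 and dilations 1, 2, 4, 8, one output frame sees 1 + 2 × 15 = 31 input frames, a little over one second of video at 30 fps. Doubling the dilation at each level lets the receptive field grow exponentially with depth, so a shallow stack can still cover a whole move.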

Implementation

The core of Dancebits is an AI model that processes both the visual and the audio streams of a dance video. The application:

- Automatically segments choreography videos into individual moves

- Provides interactive video controls with loop functionality

- Implements pose comparison between teacher and student

- Delivers performance feedback through similarity scoring

- Offers a mirror mode that flips the teacher video horizontally, so students can follow along as if facing a mirror
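The pose comparison, similarity scoring, and mirror mode listed above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Dancebits implementation. It assumes each frame is a list of 33 normalized (x, y) landmarks in MediaPipe Pose's layout (x in [0, 1], left to right). It removes position and body size by centering and scaling. It then uses cosine similarity mapped to 0-100 as a stand-in for whatever score the app actually reports. The names `mirror_pose`, `normalize_pose`, `similarity_score`, and `MIRROR_PAIRS` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

# Left/right landmark index pairs, assuming MediaPipe Pose's 33-landmark
# layout (e.g. 11/12 = shoulders, 15/16 = wrists); 0 (nose) has no partner.
MIRROR_PAIRS = [(1, 4), (2, 5), (3, 6), (7, 8), (9, 10), (11, 12), (13, 14),
                (15, 16), (17, 18), (19, 20), (21, 22), (23, 24), (25, 26),
                (27, 28), (29, 30), (31, 32)]


def mirror_pose(pose: List[Point]) -> List[Point]:
    """Flip a pose horizontally (x -> 1 - x for normalized coordinates) and
    swap left/right landmarks, matching what a pose estimator would report
    on the flipped frame."""
    flipped = [(1.0 - x, y) for x, y in pose]
    for left, right in MIRROR_PAIRS:
        flipped[left], flipped[right] = flipped[right], flipped[left]
    return flipped


def normalize_pose(pose: List[Point]) -> List[float]:
    """Remove position and scale: center on the centroid, divide by the RMS
    distance to it, and flatten to [x0, y0, x1, y1, ...]."""
    n = len(pose)
    cx = sum(x for x, _ in pose) / n
    cy = sum(y for _, y in pose) / n
    centered = [(x - cx, y - cy) for x, y in pose]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered) / n) or 1.0
    return [c / scale for point in centered for c in point]


def similarity_score(teacher: List[Point], student: List[Point]) -> float:
    """Cosine similarity of the normalized poses, clamped to [0, 1] and
    mapped to a 0-100 score. Degenerate poses (all points equal) score 0."""
    a, b = normalize_pose(teacher), normalize_pose(student)
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    if norm == 0:
        return 0.0
    return 100.0 * min(1.0, max(0.0, dot / norm))
```

Flipping x alone would leave each label attached to the wrong side of the body, so the swap keeps "left wrist" consistent with what a pose estimator would output on the mirrored frame. Because the score ignores translation and scale, a student standing further back or off-center is not penalized. It does not, however, handle timing offsets between the two videos, which would have to be aligned before frames are compared.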

Results

In testing, the system segmented both basic and advanced choreographies well. It worked best on structured dance routines, where it made practice sessions noticeably more efficient than learning from an unsegmented video tutorial. The project builds on and extends the research by Koki Endo et al. (2024) [1], and uses the AIST Dance Video Database [2] for training and validation.

Credits

This project was made possible thanks to:

- Collaboration with core team members Paras Mehta and Arpad Dusa

- Training data and segmentation labels from Endo et al. (2024), based on the AIST Dance Video Database

- Project supervision by Antonio Rueda-Toicen at Data Science Retreat

References

- [1] Endo et al. (2024). "Automatic Dance Video Segmentation for Understanding Choreography." arxiv.org/abs/2405.19727

- [2] Tsuchida et al. (2019). "AIST Dance Video Database: Multi-genre, Multi-dancer, and Multi-camera Database for Dance Information Processing." aistdancedb.ongaaccel.jp